Brainshark launches AI-powered analysis of sales reps’ self-assessment videos

The automated analysis of word choice, presentation complexity, emotions and personality complements feedback from human supervisors.

Brainshark’s platform focuses on the training of sales reps, who often upload videos of themselves trying out elevator pitches or answering specific client questions.

The problem, Senior Product Manager Mark Yacovone told me, was that Brainshark's client companies said reps weren't getting feedback quickly enough, either because of the volume of videos or because of their supervisors' schedules.

This week, the Waltham, Massachusetts-based company is adding AI to its platform for the first time in the form of a Machine Analysis engine, which automatically screens uploaded videos to provide an initial layer of feedback. The company says no one else offers this kind of automated analysis of, and feedback on, self-assessment videos.

After a video is uploaded to the Brainshark platform, the engine transcribes its audio track to text. The transcript is then checked for the key words or phrases the rep is supposed to use in a given pitch or answer, and the engine also measures speaking rate, duration and other factors.
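
To make the transcript checks concrete, here is a minimal sketch of how key-phrase matching and speaking rate could be computed once speech-to-text has produced a plain transcript. It assumes the video duration is known and that the required phrases are supplied per pitch; the names `score_transcript` and `TranscriptReport` are hypothetical and this is not Brainshark's implementation.

```python
import re
from dataclasses import dataclass, field


@dataclass
class TranscriptReport:
    """Hypothetical per-video summary; field names are illustrative, not Brainshark's."""
    word_count: int
    words_per_minute: float
    phrases_found: list[str] = field(default_factory=list)
    phrases_missing: list[str] = field(default_factory=list)


def normalize(text: str) -> str:
    """Lowercase and keep only word tokens so punctuation doesn't block a match."""
    return " ".join(re.findall(r"[a-z0-9']+", text.lower()))


def score_transcript(transcript: str, duration_seconds: float,
                     required_phrases: list[str]) -> TranscriptReport:
    """Check required phrases and compute speaking rate for one transcript."""
    words = normalize(transcript).split()
    # Pad with spaces so phrases only match on whole-word boundaries
    # (e.g. "roi" will not match inside "heroic").
    padded = f" {' '.join(words)} "
    found, missing = [], []
    for phrase in required_phrases:
        target = f" {normalize(phrase)} "
        (found if target in padded else missing).append(phrase)
    minutes = duration_seconds / 60
    wpm = len(words) / minutes if minutes > 0 else 0.0
    return TranscriptReport(len(words), round(wpm, 1), found, missing)
```

For example, a 6-second clip saying "Our platform cuts onboarding time in half. Free trial available." checked against `["onboarding time", "free trial", "ROI"]` yields 10 words at 100 words per minute, with "ROI" reported as missing. Exact substring matching is a deliberately simple choice; a production system would more likely tolerate paraphrases.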

The text transcript is also analyzed for a variety of Personality Insights, including Openness, Conscientiousness, Extroversion and Agreeableness.

There's also a textual analysis of comprehension level, expressed as the school grade level at which the rep is speaking. Yacovone noted that the appropriate grade level depends on the complexity of the material and the sophistication of the listener. Generally speaking, he said, supervisors want reps to stay in a middle range, neither too complex nor too simple.
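
The article doesn't say which readability formula Brainshark uses, so the sketch below swaps in the well-known Flesch-Kincaid Grade Level, 0.39 × (words/sentences) + 11.8 × (syllables/words) − 15.59, with a crude vowel-group syllable counter. It assumes the transcript carries sentence punctuation, which speech-to-text output may not reliably provide, and the 6-to-10 "mid-range" band is a hypothetical threshold, not a figure from Brainshark.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, drop a silent trailing 'e', minimum 1."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid Grade Level; returns 0.0 for empty input."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)


def grade_band(grade: float, low: float = 6.0, high: float = 10.0) -> str:
    """Classify a grade level against a hypothetical target band."""
    if grade < low:
        return "too simple"
    if grade > high:
        return "too complex"
    return "mid-range"
```

Short, monosyllabic sentences such as "The cat sat. The dog ran." score well below the band, while a single sentence of long Latinate words lands far above it, which mirrors the too-simple and too-complex extremes Yacovone describes.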

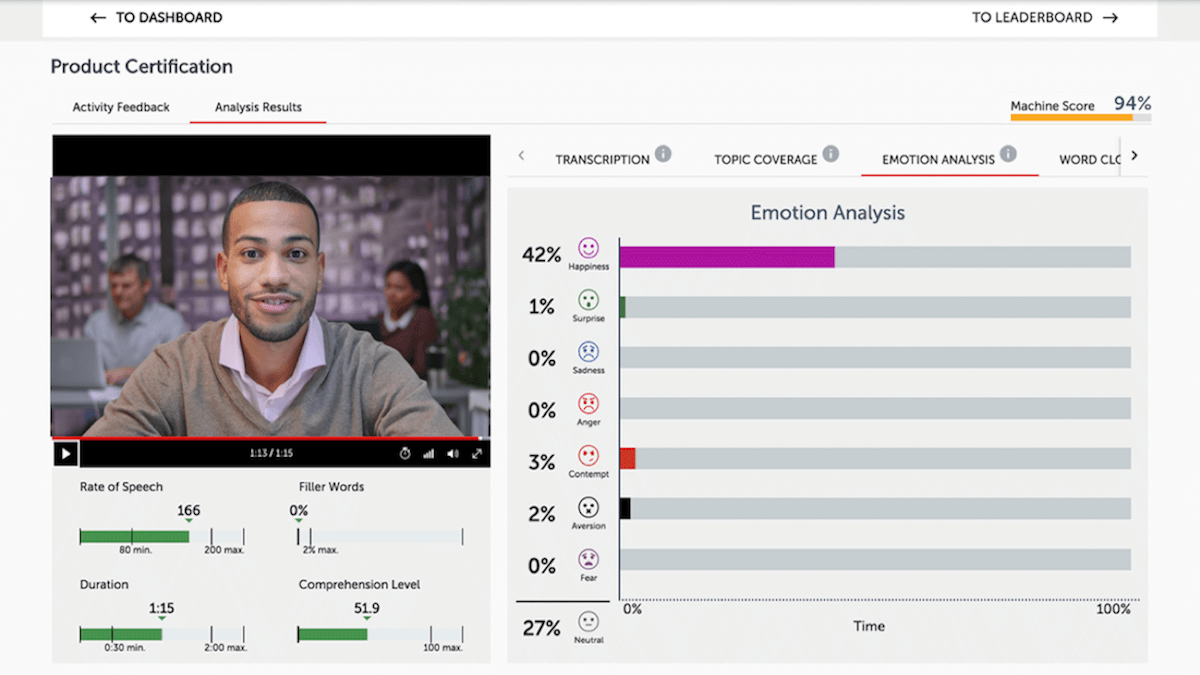

Machine Analysis also applies facial analysis to the video itself, which is usually a headshot of a single rep, to generate an emotional analysis. The result is a bar graph that changes over the course of the video, tracking eight categories (seven emotions plus Neutral) at each point. At the 10-second mark, for instance, Happiness might register 53 percent, while Surprise, Sadness, Anger, Contempt, Aversion, Fear and Neutral together account for the remaining 47 percent. (See screen at top of this page.)
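
The underlying model isn't described, so this sketch only illustrates the bookkeeping behind the bar graph: assuming a facial-expression model emits a non-negative raw score for each of the eight categories at a given timestamp, dividing each score by the total turns them into percentages that always sum to 100. The function names and raw-score input are hypothetical.

```python
# The eight categories named in the article.
EMOTIONS = ("Happiness", "Surprise", "Sadness", "Anger",
            "Contempt", "Aversion", "Fear", "Neutral")


def to_percentages(scores: dict[str, float]) -> dict[str, float]:
    """Normalize non-negative raw scores for one frame so they sum to 100."""
    missing = set(EMOTIONS) - scores.keys()
    if missing:
        raise ValueError(f"missing categories: {sorted(missing)}")
    total = sum(scores[e] for e in EMOTIONS)
    if total <= 0:
        raise ValueError("scores must have a positive total")
    return {e: 100.0 * scores[e] / total for e in EMOTIONS}


def dominant_emotion(percentages: dict[str, float]) -> str:
    """Return the category with the largest share at this timestamp."""
    return max(percentages, key=percentages.get)
```

With raw scores of 1.06 for Happiness and 0.94 spread across the other seven categories, Happiness comes out at 53 percent and the rest share the remaining 47 percent, matching the example above. Running this per sampled frame would produce the time series the bar graph animates.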

Human supervisors still provide feedback, but their comments usually come after the platform's analysis. Yacovone said this lets supervisors focus first on the outliers, reps who are performing either spectacularly or poorly, before moving on to those who are simply satisfactory.

Opinions expressed in this article are those of the guest author and not necessarily those of MarTech.