Aivon launches blockchain-based protocol for ‘first decentralized video search engine’

The Singapore-based organization is launching a non-profit community composed of token-incentivized humans who validate AI-generated metadata.

Metadata is the Achilles heel of video. These shorthand textual descriptions can be employed for more detailed searching, classification or other organizing, but, when scale is required, machine-generated metadata has accuracy issues.

On the other hand, the huge volume of video on the web makes human validation prohibitively expensive, so much video searching misses a lot of what’s visually there.

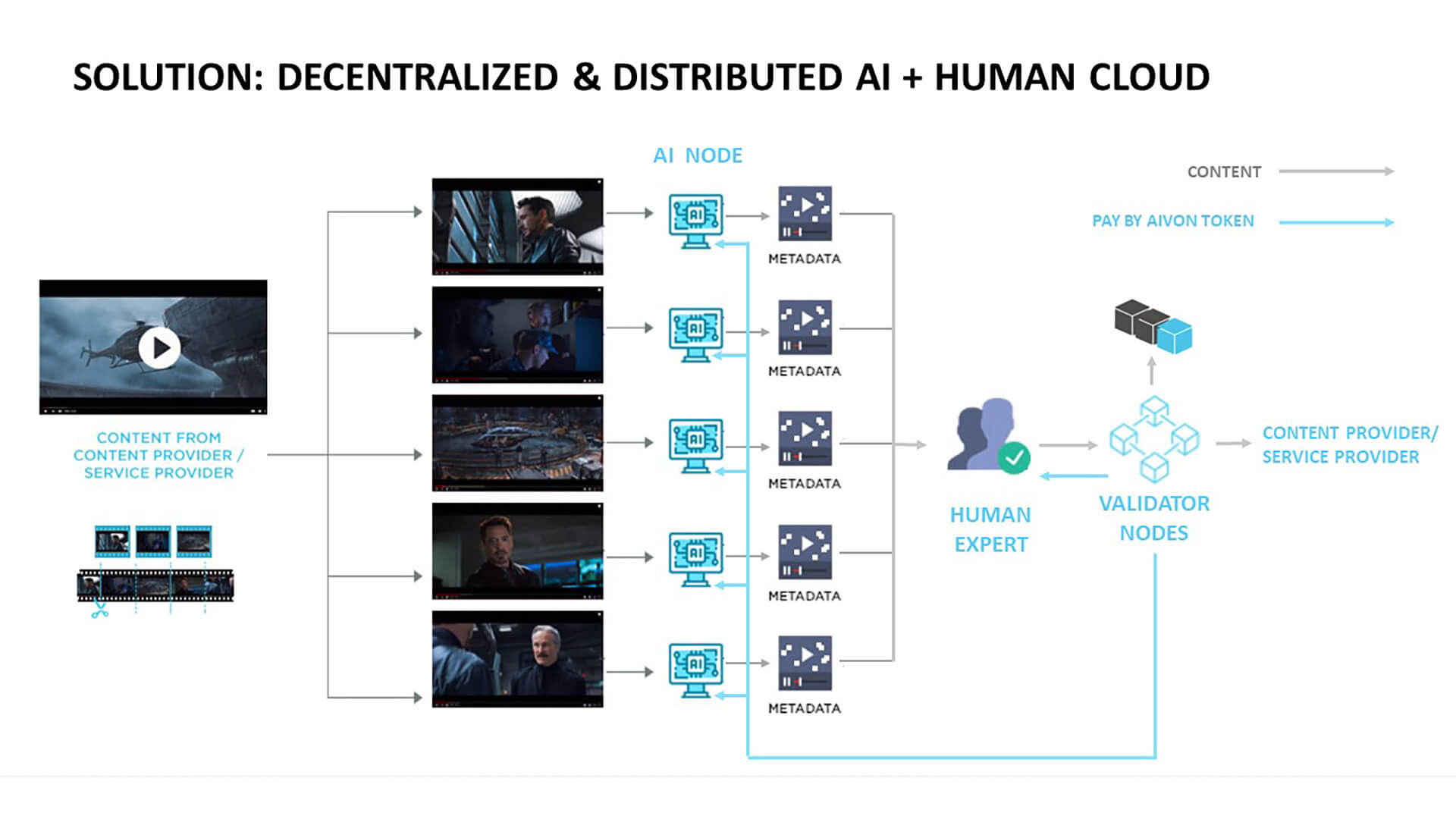

To solve this issue, a Singapore-based organization called Aivon has launched a blockchain-based open protocol that combines AI with human crowdsourcing to create and validate text and metadata. The protocol supports what Aivon describes as the first decentralized video search engine, as well as translation, transcription and brand-safety applications.

Aivon, founder and CEO Rex Wong told me, springs out of his current company, iVideoSmart, which provides white-label video and ad tech platforms for apps like VLC Player, telcos like Hutchison and media companies like Taiwan Television.

Through these clients, he says, iVideoSmart has a global, addressable user base of about 500 million. Currently, he says, about 300 of those users are validating the metadata and other textual descriptions generated by AI for translating, transcribing and ensuring the brand safety of videos.

Those current human workers, Wong said, are being paid for their work, but they can’t scale for large applications, such as a video search engine that would need to generate accurate metadata for millions of videos. To accomplish that, Aivon is announcing that it is creating a blockchain-based ecosystem with tokens that can be used to incentivize users.

The Aivon tokens, generated via the Ethereum blockchain protocol and available in October, can be redeemed on open token exchanges or used to buy content or other products offered in the iVideoSmart platform or by Aivon community members. They can also be earned and used by content providers, advertisers, AI providers and others in the ecosystem.

Governed by a non-profit

When the tokens are added this fall, Wong said, a video search engine powered by the quality of the videos’ metadata will also be launched. AI will take a first stab at textually describing the videos, and then humans will validate or modify AI’s work.

Token-incentivized humans will also validate the output of the existing brand safety, translation and transcription applications at a larger scale than is possible today. These textual descriptors are intended to provide much more detail than conventional metatags, and therefore more value for such things as real-time product placement insertions.
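As a rough illustration of the workflow Wong describes (an AI first pass, then a human who confirms or corrects it and earns a token), here is a minimal Python sketch. It is not Aivon's protocol or code: the class names, the `review` function and the flat one-token reward are all hypothetical, and the on-chain token mechanics are left out entirely.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Assumed flat reward per review; the article gives no reward schedule.
REWARD_PER_REVIEW = 1


@dataclass
class VideoRecord:
    video_id: str
    ai_tags: List[str]                              # first-pass tags from a hypothetical AI model
    final_tags: List[str] = field(default_factory=list)
    validated: bool = False


@dataclass
class Validator:
    name: str
    tokens: int = 0                                 # illustrative balance, not a real token ledger


def review(record: VideoRecord, validator: Validator,
           corrections: Optional[List[str]] = None) -> VideoRecord:
    """Human step: accept the AI tags as-is, or replace them with corrections."""
    if corrections is None:
        record.final_tags = list(record.ai_tags)    # human confirms the AI output
    else:
        record.final_tags = list(corrections)       # human modifies the AI output
    record.validated = True
    validator.tokens += REWARD_PER_REVIEW           # incentive for doing the review
    return record


# Example: the AI misses an object that the human validator adds.
record = VideoRecord("vid-001", ai_tags=["dog", "beach"])
alice = Validator("alice")
review(record, alice, corrections=["dog", "beach", "frisbee"])
```

In this sketch the validator is rewarded whether the tags are confirmed or corrected, which matches Wong's point that humans would still be needed to confirm accuracy even if the AI rarely needs correcting.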

This Open Community Video Search Engine, Wong said, will be governed by a non-profit foundation controlled by voting members of the Aivon community. Advertising applications using this protocol will be for-profit.

Other organizations/companies will be able to use the open-source protocol and community of incentivized human validators to build their own search engines or other decentralized apps, such as content rights management.

Given the rapid evolution of AI, Wong acknowledged that it may get well above the 90 percent mark for meta-tagging accuracy at some point in the near future.

When that happens, he said, humans won’t be needed anymore for correcting or adding to the text, but they will still be needed — indefinitely — to confirm AI’s accuracy.

Opinions expressed in this article are those of the guest author and not necessarily MarTech.
