A new approach to measuring engagement on content-heavy sites

If no easily-identified conversions occur on your site, measuring engagement can be tricky. Columnist Nick Iyengar explains why a new approach could help you improve the quality of measurements and better understand engagement on your site.

We don’t usually think of it this way, but ecommerce sites are fairly straightforward to measure and evaluate — at least compared with their non-commerce cousins. We typically start with some basic revenue tracking and funnel data and iterate from there.

But what about websites that don’t sell products — or even have any easily-identifiable conversions that users are supposed to complete? These kinds of sites are more common than you might think.

Global brands in industries like consumer packaged goods (CPG), energy, financial services and many more manage websites where conversions are few and far between. Perhaps you can download a coupon, find a location near you, watch a video or conduct some other type of “engagement,” but many organizations don’t think of these kinds of actions as actual conversions.

Analysis in this sort of environment becomes difficult; what does “success” actually mean?

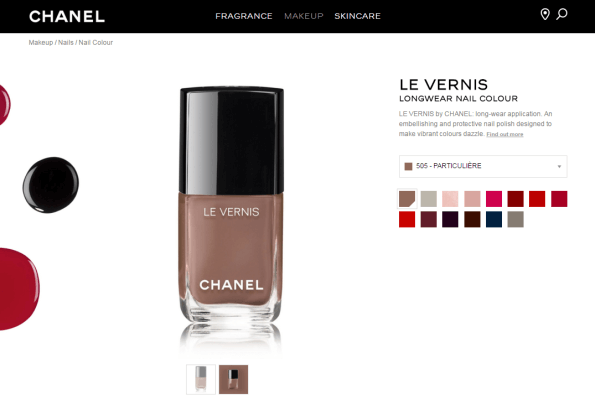

CPG brands offer us a lot of good examples of these challenges. Chanel.com lets you look at pictures of products and read about them, but not buy them directly. On a page where you’d expect to see an “Add to Cart” button, there just isn’t one.

Similarly, Unilever’s popular Axe brand has a slick website with videos, product ratings and a “Buy Now” button that seems promising… but actually serves up a list of retailers you’ll have to visit if you want to buy male grooming products that promise to help you “find your magic.”

You could say the same for many brands in the industries mentioned above. They make huge investments in maintaining a brand through their website, content shared on social media and digital advertising, but not for the purposes of ensuring you add something to a cart and check out.

Most of the time, they’re hoping to generate some kind of “engagement” that leads to outcomes down the road — perhaps greater brand affinity, an offline sale or something else.

But, as author and entrepreneur Avinash Kaushik noted on his blog almost 10 years ago, engagement isn’t a metric — “it’s an excuse.”

Indeed, organizations that focus on engagement because they don’t have true “conversions” on their site often find themselves underwhelmed by their data. Insights aren’t specific. Optimization hypotheses are based on ambiguous data.

So, in the absence of concrete conversions, how do we measure engagement in a more meaningful way? To be able to demonstrate the value of digital, organizations facing this challenge have to get creative with both the tools they use to measure success and the very definition of success itself.

I often work with clients who are wrestling with this particular issue. Recently, I worked with a global technology brand to reinvent the way they measure engagement, which has paved the way for more granular insights and higher-quality optimization recommendations.

The lessons we learned along the way can be used to improve the quality of measurement for many organizations struggling to define “engagement” meaningfully.

The challenge

The global technology company (GTC) we worked with has a website with a tremendous breadth of scope: hundreds of thousands of unique web pages, aimed at a variety of very different audiences. Individual consumers, IT executives, developers, job-seekers and investors visit the website.

With that much content and so many different constituents, it was hard to define what “engagement” really meant — especially in a way that would scale across the organization. The challenge was to help this GTC better understand how the website adds value to the organization. Ultimately, to do that, we had to redefine “success” itself.

Previously, the GTC had identified a series of site behaviors that signaled engagement: scrolling down a page, clicking on a link, starting a video, downloading a file and a variety of other actions. These actions were grouped together, and web analytics focused on how often at least one of these actions occurred.

This enabled the organization to draw conclusions like this: “41 percent of sessions from this campaign were engaged” — where “engaged” meant at least one of those actions had happened. This wasn’t an unreasonable approach — it allowed the GTC to scale a solution across a massive web presence and produce data points that were easy for data consumers to understand.
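To make the binary approach concrete, here is a minimal Python sketch. The action names, the `binary_engagement_rate` helper and the sample sessions are all illustrative assumptions, not the GTC's actual tracking setup.

```python
# Hypothetical set of actions that count as "engagement" (names are illustrative).
ENGAGEMENT_ACTIONS = {"scroll", "link_click", "video_start", "file_download"}


def binary_engagement_rate(sessions):
    """Return the share of sessions containing at least one engagement action."""
    if not sessions:
        return 0.0
    engaged = sum(1 for actions in sessions if ENGAGEMENT_ACTIONS & set(actions))
    return engaged / len(sessions)


# Made-up sample: each inner list holds the actions recorded in one session.
sessions = [
    ["scroll"],
    [],
    ["video_start", "file_download"],
    [],
    ["pageview_only"],  # not in the engagement set, so this session is not engaged
]

rate = binary_engagement_rate(sessions)
print(f"{rate:.0%} of sessions were engaged")  # prints "40% of sessions were engaged"
```

Note that the session with a single scroll and the session with a video start plus a download count identically here, which is the limitation discussed next.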

However, after using this “binary” approach (i.e., engagement either happened or it didn’t) for a while, the GTC started to ask questions that this type of measurement couldn’t quite answer. Because “engagement” was being measured as an aggregate of various potential actions, it was unclear what the term actually meant.

If 41 percent of sessions were “engaged,” what kind of engagement was actually happening? Was it the type of engagement that mattered in that context? Grouping all the engagement actions together had created a “one-size-fits-all” approach that was preventing a more nuanced understanding of engagement.

A new approach

The solution was to take the same user actions, look at their value in relation to each other, and then assign an appropriate weight to the actions. For instance, instead of lumping together page scrolls, video plays and all the other actions, we decided to track them each individually and assign them a score based on how valuable the action is determined to be.

For example, scrolling down on a page might receive a score of two points, while signing up for an email newsletter might receive 20 points. All the other actions — video views, file downloads and so on — received their own score. The actual point value assigned isn’t too important; it’s the relative value of each action that is key.
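A weighted score along these lines could be sketched in Python as follows. Only the two-point scroll and the 20-point newsletter signup come from the example above; the other point values, the action names and the `engagement_score` helper are assumptions for illustration.

```python
# Point values per action. Only "scroll" (2) and "newsletter_signup" (20)
# come from the example in the text; the rest are assumed for illustration.
ACTION_POINTS = {
    "scroll": 2,
    "newsletter_signup": 20,
    "video_start": 8,     # assumed value
    "file_download": 12,  # assumed value
}


def engagement_score(actions):
    """Sum the points for every tracked action; untracked actions score zero."""
    return sum(ACTION_POINTS.get(action, 0) for action in actions)


session = ["scroll", "video_start", "newsletter_signup"]
score = engagement_score(session)
print(score)  # 30
```

Because only the relative values matter, doubling every entry in `ACTION_POINTS` would change the totals but not the ranking of one session or page against another.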

This may sound simple at first, but as we began to explore this new model, there were a lot of questions. For instance, who gets to decide how many points a certain action gets?

This was a bit of a loaded question — when you change the definition of “success,” content owners may be impacted in different ways. Some will suddenly see their content as performing “better,” while others may discover their content is suddenly performing “worse.”

To navigate this issue, we realized it was crucial to ensure that the GTC’s various data consumers were comparing their content to relevant, applicable benchmarks. For example, under our new methodology, a content owner with tons of video content would suddenly be scoring a lot of points. Meanwhile, a blog owner — whose content is simply meant to be scrolled through — may not be scoring nearly as many points.

It was crucial to make sure everyone realized they wouldn’t be compared to dissimilar, irrelevant benchmarks.
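One way to keep comparisons fair is to index each page against the average for its own content type. The sketch below assumes that approach; the page paths, content types and scores are invented, and the `index_against_peers` helper is hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Invented sample data: points per pageview for pages of two content types.
pages = [
    {"page": "/videos/launch", "type": "video", "points_per_pageview": 18.0},
    {"page": "/videos/demo", "type": "video", "points_per_pageview": 12.0},
    {"page": "/blog/roadmap", "type": "blog", "points_per_pageview": 3.0},
    {"page": "/blog/tips", "type": "blog", "points_per_pageview": 5.0},
]


def index_against_peers(pages):
    """Divide each page's score by the mean score of pages of the same type."""
    scores_by_type = defaultdict(list)
    for p in pages:
        scores_by_type[p["type"]].append(p["points_per_pageview"])
    benchmarks = {t: mean(scores) for t, scores in scores_by_type.items()}
    return {
        p["page"]: p["points_per_pageview"] / benchmarks[p["type"]]
        for p in pages
    }


indexed = index_against_peers(pages)
for page, ratio in indexed.items():
    print(f"{page}: {ratio:.2f}x its peer benchmark")
```

In this made-up data, the blog post at `/blog/tips` scores far fewer raw points than either video page, yet it outperforms its own benchmark by more than `/videos/launch` does.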

The benefits

With that issue sorted out, our new weighted measurement of engagement started to show its key benefits. We realized quickly that pages that performed equally well under the old binary methodology suddenly looked quite different when using the weighted approach.

For example, two pages might each have shown “engagement” on 40 percent of pageviews previously, but now we were seeing that the number of points accrued per pageview on each could be quite different. This showed that while the same proportion of users were generating some kind of engagement, one page was doing a much better job than the other of generating the specific types of engagement that were most desired in that context.

The “aha” moment: Content that appears to perform similarly under the old methodology turns out to be performing very differently when using a weighted approach to engagement.
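The effect is easy to reproduce with a small Python sketch. The weights, the two sample pages and both helper functions are hypothetical; the point is only that identical binary rates can hide very different weighted scores.

```python
# Hypothetical weights for two tracked actions.
ACTION_POINTS = {"scroll": 2, "file_download": 12}


def binary_rate(pageviews):
    """Share of pageviews with at least one recorded action."""
    if not pageviews:
        return 0.0
    return sum(1 for actions in pageviews if actions) / len(pageviews)


def points_per_pageview(pageviews):
    """Average weighted points across all pageviews, engaged or not."""
    if not pageviews:
        return 0.0
    total = sum(ACTION_POINTS.get(a, 0) for actions in pageviews for a in actions)
    return total / len(pageviews)


# Made-up data: five pageviews per page, two of them engaged on each page.
page_a = [["scroll"], ["scroll"], [], [], []]
page_b = [["scroll", "file_download"], ["file_download"], [], [], []]

for name, views in (("A", page_a), ("B", page_b)):
    print(name, f"{binary_rate(views):.0%}", points_per_pageview(views))
```

Both pages report 40 percent engagement under the binary measure, but page B averages 5.2 points per pageview against 0.8 for page A.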

We also found we had a much better ability to drill into engagement to see what kinds of actions were driving the “points” that were being tallied up.

For example, if a site section were generating 25 points per pageview, we’d easily be able to “unpack” those points to see where they were really coming from. Was it all from page scrolling? Were people ignoring the PDF downloads that we really wanted them to complete?
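“Unpacking” a score amounts to keeping the per-action totals instead of only the sum. Here is a minimal sketch, assuming hypothetical weights, action names and a `points_by_action` helper that are not part of the original project.

```python
# Hypothetical weights for the tracked actions.
ACTION_POINTS = {"scroll": 2, "video_start": 8, "file_download": 12}


def points_by_action(pageviews):
    """Total the points each tracked action contributed across all pageviews."""
    totals = dict.fromkeys(ACTION_POINTS, 0)  # start every tracked action at zero
    for actions in pageviews:
        for action in actions:
            if action in ACTION_POINTS:
                totals[action] += ACTION_POINTS[action]
    return totals


# Made-up site section: four pageviews, none of which included a download.
section = [["scroll"], ["scroll"], ["scroll", "video_start"], ["scroll"]]

breakdown = points_by_action(section)
per_pageview = sum(breakdown.values()) / len(section)
print(breakdown)     # {'scroll': 8, 'video_start': 8, 'file_download': 0}
print(per_pageview)  # 4.0
```

Starting every tracked action at zero means ignored actions, such as the downloads here, show up explicitly in the breakdown rather than silently disappearing.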

This added specificity, together with the ability to measure engagement as a graded score rather than a yes-or-no flag, made web analysts happy and is yielding better, more targeted optimization recommendations across the organization. However, global brands don’t necessarily make big decisions based on a new KPI cooked up by the analytics people.

To get buy-in for our new engagement methodology from the highest levels, we had to go the extra mile and show that measuring engagement this way would actually let the GTC correlate web KPIs with more traditional brand data, generated with tools like on-site surveys.

Fortunately, we’ve been able to do that as well, demonstrating that our new web engagement metrics are strongly correlated with people’s perceptions of the brand. This means that we have a brand-new way to measure engagement which not only keeps the web analysts happy but also is internally consistent with critical brand measures.
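The article doesn’t say which statistic was used, so the sketch below simply assumes a Pearson correlation between average engagement points and average survey scores per site section. The `pearson` helper and every number in it are invented for illustration and are not the GTC’s results.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when a sequence is constant")
    return covariance / (spread_x * spread_y)


# Invented per-section averages: engagement points and 1-to-5 survey ratings.
points_per_pageview = [3.1, 5.4, 8.0, 12.5, 15.2]
brand_survey_score = [2.9, 3.4, 3.6, 4.2, 4.5]

r = pearson(points_per_pageview, brand_survey_score)
print(f"Pearson r = {r:.2f}")
```

A value of `r` close to 1 would indicate that sections with higher engagement scores also tend to have higher survey ratings; it would not, on its own, show that one causes the other.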

Global brands have unique challenges when it comes to analytics — particularly when they are managing content-heavy sites that don’t have a lot of conversion activities happening. Measuring “engagement” makes sense as a fall-back option in these cases, but oftentimes, the need for scale leads to simplified measurement. This, in turn, leads to unclear reporting, fuzzy optimization recommendations and a general sense that there must be something better.

However, it’s possible to build a solution that scales and provides a nuanced, meaningful understanding of engagement on a site. Solutions like the one I’ve outlined above may help you move from measuring engagement as an “excuse” to clearly understanding success on your websites.

Opinions expressed in this article are those of the guest author and not necessarily MarTech.